Well, the voters of Alabama selected a Democrat rather than an alleged child molester to be their senator in the special election of 12-12-17. That's a good thing, but there's a downside.

They voted that way by a margin of only about a percent or so. That means very nearly half the voters in Alabama that day actually preferred the alleged child molester to represent them, just for the political party advantage.

When the voters are so deluded by party propaganda as to effectively have no ethics, then why is it a surprise that so many politicians are similarly detestable?

Wednesday, December 13, 2017

Thursday, November 23, 2017

A Better Version of the MCP Space Suit?

This is a concept proposal for a better version of the mechanical counter-pressure (MCP) space suit. It combines the best features, and eliminates the worst disadvantages, of the two particular MCP design approaches upon which it is based. These are the “partial pressure” suit of the 1950’s and the “elastic space leotard” of Dr. Paul Webb. The result should be a lightweight, supple (non-restrictive) suit that, with suitable unpressurized outerwear, can be used on pretty much any planetary surface, even if totally airless, or even in space. It need not use exotically-tailored materials in its construction. It should be relatively easy to doff and don.

This article updates earlier articles on this subject.

Those are:

Date      Title
2-15-16   Suits and Atmospheres for Space (supersedes those following)
1-15-16   Astronaut Facing Drowning Points Out Need for Better Space Suit
11-17-14  Space Suit and Habitat Atmospheres
2-11-14   On-Orbit Repair and Assembly Facility
1-21-11   Fundamental Design Criteria for Alternative Space Suit Approaches

The idea here is to combine the two demonstrated approaches

that both apply the fundamental MCP principle:

the body needs pressure applied to its skin to counterbalance the

necessary breathing gas pressure. The

body simply does not care whether this counter-pressure is applied as gas

pressure inside a gas balloon suit, or is

exerted upon the skin by mechanical means.

The first article cited in the list above (“Suits and

Atmospheres for Space” dated 2-15-16) determines that pure oxygen breathing gas

pressures from 0.18 atm to 0.25+ atm should be feasible. How that was calculated is not repeated

here. My preferred range of helmet

oxygen pressures is 0.18 to 0.20 atm,

for which wet in-lung oxygen partial pressures range from 0.11 to 0.13 atm, same as the wet in-lung oxygen partial

pressures in Earth’s atmosphere at altitudes between 10,000 and 14,000

feet.

However, it takes only 0.26 atm to give you the same wet in-lung oxygen pressure as sea level Earth air. The 0.33 atm used by NASA is entirely unnecessary, unless it is to help overcome the exhausting efforts necessary to move or perform tasks in the extremely stiff and resistive, heavy, and bulky “gas balloon” suits they use.
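For readers who want to check those pressures, here is a minimal sketch of one common way to estimate wet in-lung oxygen partial pressure. It is not necessarily the method used in the earlier article. It assumes lung gas is saturated with water vapor at about 47 mm Hg (body temperature), takes air as 20.95% oxygen, and ignores carbon dioxide. The function and constant names are mine, for illustration only.

```python
# Sketch only: wet in-lung oxygen partial pressure under stated assumptions.
MMHG_PER_ATM = 760.0
WATER_VAPOR_MMHG = 47.0  # assumed water vapor pressure in the lungs (~37 C)


def wet_lung_o2_atm(total_pressure_atm, o2_fraction=1.0):
    """Oxygen partial pressure (atm) in water-saturated lung gas."""
    dry_gas_mmhg = total_pressure_atm * MMHG_PER_ATM - WATER_VAPOR_MMHG
    return o2_fraction * dry_gas_mmhg / MMHG_PER_ATM


# Sea level air: 1 atm total, assumed 20.95 % oxygen by volume.
sea_level_air = wet_lung_o2_atm(1.0, o2_fraction=0.2095)

for helmet_atm in (0.18, 0.20, 0.26, 0.33):
    value = wet_lung_o2_atm(helmet_atm)
    print(f"{helmet_atm:.2f} atm pure oxygen -> {value:.3f} atm wet in-lung oxygen")
print(f"sea level air        -> {sea_level_air:.3f} atm wet in-lung oxygen")
```

Under these assumptions, 0.26 atm of pure oxygen comes out very close to sea level air, and the 0.18 to 0.20 atm range lands in the neighborhood of the figures quoted above.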

The 1940’s design that operationally met the need for

extreme altitude protection for short periods of time was the “partial

pressure” suit of Figure 1, in which compression

was achieved with inflated “capstan tubes”.

These suits were widely used into the 1960’s. The capstans pulled the non-stretchable

fabric tight upon the torso and extremities.

This provided the counterpressure necessary for pressure-breathing

oxygen during exposures to vacuum or near vacuum, for durations up to about 10 minutes

long. This was for bailouts from above

70,000 feet, and would have worked for

similar short periods even in hard vacuum.

Hands and feet were left uncompressed,

but for only 10 minutes’ exposure,

these body parts could not begin to swell from vacuum effects.

The advantages of this design were (1) ease of doff and don, (2) it was simple enough to be quite reliable, and (3) it was not very restrictive, whether the capstan tubes were pressurized or not. The disadvantages were the rather uneven compression achieved, and leaving the hands and feet completely uncompressed. These limited the allowable exposure time in two ways: (1) uncompressed small body parts begin swelling in about 30 minutes, and (2) between the uncompressed parts and the uneven compression achieved on the extremities, blood pooling into the under-compressed parts could lead to fainting within about 10 to 15 minutes.

Figure 1 – Partial Pressure Suit Design Used From the late 1940’s to the Early 1960’s

In the late 1960’s,

Dr. Paul Webb performed striking experiments with an alternative way to

achieve mechanical counterpressure upon the body. He used multiple layers of elastic fabric

(the then-new panty hose material) as a tight-fitting leotard-like

garment. This was not a single-piece

garment. It achieved more-uniform

compression on the torso and extremities than did the older partial pressure

suit. Dr. Webb included elastic

compression gloves and booties, so that

the entire body was compressed, removing

the time limits. Breathing difficulties

were solved with a tidal volume breathing bag enclosed by an inelastic

jacket.

Breathing gas was pure oxygen at 190 mm Hg pressure (0.25

atm) fed into the helmet from a small backpack with a liquid oxygen Dewar for

makeup oxygen. This type of garment was very

unrestrictive of movement, and was

demonstrated quite adequate for near-vacuum exposures equivalent to 87,000 feet,

for durations up to 30 minutes. It was intended for possible application as

an Apollo moon suit, but could not be

made operationally ready in time. It has

been mostly forgotten ever since.

The advantages are very unrestricted movement, very light weight (85 pounds for suit plus

helmet plus oxygen backpack), and no

need for a cooling system: you just

sweat right through the porous garment,

same as ordinary street clothing.

Plus, the garment’s pieces were

quite launderable. Dr. Webb’s test rig

is shown in Figure 2. 6 or 7 layers of

the panty hose material provided adequate counter-pressure.

Figure 2 – Dr. Webb’s “Elastic Leotard” MCP Space Suit Prototype as Demonstrated

The disadvantages were essentially just difficult (time-consuming)

efforts to don and to doff the garment’s pieces, precisely because they were inherently very

tight-fitting. For use on a

planetary surface or out in space, one

treats the suit as “vacuum-protective underwear”, and adds insulating or otherwise protective non-pressurized

outerwear over it. So protection from

hazards is not a disadvantage at all, but

only if one uses the vacuum-protective underwear notion.

The main advantage of Dr. Webb’s “elastic space leotard”

over the “partial pressure” suit was the more even (and more complete)

compression achievable with the elastic fabrics. The main advantage of the “partial pressure”

suit over the “elastic space leotard” was the ease of donning and doffing the

garment, when the capstan tubes were

depressurized, releasing the fabric

tension. Both approaches offer very

significant advantages over the “gas balloon” suits in use since the 1960’s as

space suits: lighter, launderable,

and far, far more supple and

non-restrictive for the wearer.

That suggests combining both of the successful MCP design approaches (inflated

capstans and elastic fabrics) into a single mechanical counterpressure suit

design. The capstans apply and

relax the tension in the fabric which provides the counter-pressure on the body, and the elastic fabric makes the achievable

compression far more uniform. What is

required from a development standpoint is experimental determination of the

number of layers of elastic fabric required for each piece of the garment, in order to achieve the desired compression in

every piece.

If done this way,

there is no need for directionally-tailored stiffness properties in

specialty fabrics, the basis of Dr. Dava

Newman’s work with mechanical compression suits (see Figure 3). Ordinary commercial elastic fabrics and

ordinary commercial joining techniques can be used. In other words, pretty much anyone can build one of these

space suits!

Figure 3 – Dr. Dava Newman’s MCP Suit Based on

Directionally-Tailored Fabric Properties

So, the MCP suit proposed here has certain key features (see list below). It will resemble the old “partial pressure” suits, except that protective outerwear (insulated coveralls, etc.) gets worn over the compression suit itself, and the helmet is likely a clear bubble for visibility. There is an oxygen backpack with a radio. There is no need for any sort of cooling system. Everything is easily cleaned or laundered free of dust, dirt, sweat, and similar contamination.

Key features list:

#1. Pressurized capstan tubes pull the elastic fabric tight

whenever the helmet oxygen is “on”, but

depressurize and slack the garment tension when helmet oxygen is “off”. The capstan tubes are just part of the oxygen

pressure breathing system. Slacking the

fabric tension makes doff and don far easier.

#2. The multi-piece garment is composed of multiple layers

of elastic fabric to provide the desired level of stiffness that will achieve

the desired level of compression in each piece of the garment. This depends upon both the shape of the

piece, and upon how much circumferential

shortening is achieved by inflating the capstan.

#3. The pressure garment is vacuum-protective underwear, over which the wearer puts whatever protective outerwear garments are appropriate to the task at hand. For example, the wearer might need white insulated coveralls and insulated hiking boots, plus insulated gloves. One could even add some sort of simple broad-brimmed hat to the helmet if sunlight were intense.

#4. The clear bubble helmet is attached to the torso garment

piece. This torso garment piece also incorporates an inelastic jacket

surrounding a tidal volume breathing bag.

Helmet, breathing bag, and capstans all pressurize with oxygen from

the supply simultaneously, and are (in

fact) connected. All are activated by

one on/off control.

#5. The oxygen

backpack is just that, no cooling system

required. It probably uses liquid oxygen

from a Dewar as make-up oxygen, has

regeneratable carbon dioxide absorption canisters, and a battery-powered radio. It might also contain a drinking water feed

connected to the helmet. Attitude and

translation thrusters for free flight in space can be a separate chair-like

unit, and this function is entirely unnecessary

on a planetary surface.

#6. For concave body surfaces and complex shapes like

genitalia, the pressure suit can

incorporate semi-fluid gel packs that surround these body parts, making the body effectively convex

everywhere.

Figure 4 – How the Capstans and Elastic Fabric Work Together

for an Improved MCP Suit

About the only caveat might be that the breathing gas pressure could

be too small to also serve as the capstan inflation pressure. If that should prove to be true, then there need to be two final pressure

regulators in the oxygen backpack,

instead of just one. That problem

can be easily solved!

Monday, October 23, 2017

Reverse-Engineering the ITS/Second Stage of the Spacex BFR/ITS System

Update 4-17-18: since writing this article, I have gone to the Spacex website, where the 2017 presentation materials and more are posted about this design. I have re-visited my reverse-engineering of the capabilities of this vehicle in greater detail with greater fidelity to reality, in all its complexity. I have posted that new, improved analysis as "Reverse-Engineering the 2017 Version of the Spacex BFR", dated 4-17-2018. I do recommend that readers use that newer analysis article, rather than this one.

----------------------------------------------------------------

The “giant Mars rocket” proposed by Spacex has reduced in

size somewhat since its first reveal at the Guadalajara meeting. The term “BFR” is now beginning to refer to the

first stage of the two-stage system,

which flies back and lands for reuse.

The term “ITS” more properly applies to the reusable second stage, which apparently has two forms. Those are the cargo/passenger craft that goes

to destination after refilling on-orbit,

and a flyback tanker that provides the refill propellants on-orbit.

Data published by Spacex at the latest meeting indicate a cargo/passenger

vehicle that summarizes as given in Figure 1. Grossly, this is a 9 m diameter vehicle about 48 m

long, with a dry mass of about 85 metric

tons, and propellant tankage that holds

about 240 metric tons of liquid methane and 860 metric tons of liquid

oxygen. Stated payload weights are 150 metric

tons on ascent (and presumably to destination), and “typically” 50 metric tons

on return. Characteristics of the tanker

form are less clear, but it seemingly

has a lighter dry weight of about 50 metric tons.

Figure 1 -- Estimated Characteristics of ITS Per 2017 Revelations

These two versions presumably share the same ascent propellant

tankage and engine cluster. Those

engines include both sea level and vacuum expansion forms of the same Raptor

engine, with a nominal chamber pressure

of 250 bar, and deeply-throttleable to

20% thrust. The cluster has 4 vacuum

engines of 1900 kN thrust each at 375 sec vacuum specific impulse, and two sea level engines of 1700 kN thrust

each, and specific impulses of 356 sec

in vacuum and 330 sec at sea level. Exit

diameters are 1.3 m and 2.4 m for the sea level and vacuum forms, respectively.

(I did not correct sea level thrust to vacuum.)

I am presuming here that second stage operation during

launches to Earth orbit takes place in vacuum,

so I use the vacuum thrust data for both versions of the engine. Each type’s thrust is therefore associated

with a propellant flow rate via its specific impulse. Summing these gets a total full thrust and a

total propellant flow, and thus an

effective “average” vacuum specific impulse with all six engines running, for an effective exhaust velocity of about

3.5762 km/sec. That calculation

summarizes as follows, where effective

cluster specific impulse is total thrust divided by total flow rate (Figure 2).

Now, on the assumption that both forms of the vehicle have the same ascent propellant tanks and quantities (totaling 1100 metric tons of propellants), the following weight statement and delta-vee table apply (Figure 3). For the tanker, the first-listed payload of 150 tons is assumed from the cargo/passenger version. The second is back-calculated by holding the tanker delta-vee capability to be the same as that of the heavier ascent form of the cargo/passenger vehicle.

To do that, one finds the required mass ratio MR from the delta-vee, then solves the mass ratio build-up for the unknown payload Wpay, where Wp is the propellant weight and Wdry is the dry weight:

Wpay = [Wp – (MR – 1) Wdry] / (MR – 1)

What I find very interesting here is that Spacex seems to

have said it takes 6 tankers to fully refill an ITS on orbit for its voyage to

destination. If you look at the heavier

tanker that gets the same 6.2 km/sec delta-vee as the fully-loaded

cargo/passenger form, then 1100 metric

tons of propellant divided by an estimated 184.7 metric tons per tanker equals

5.956 (almost exactly 6) tankers required.

So the tanker at 50 tons dry weight seems to hold 1100 tons of ascent

propellant, and just about 185 more tons

of propellant-as-payload with which to refill a cargo/passenger ITS on orbit. It would appear this estimate is then just about

right. It does presume all 6 engines

running all of the time.
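As a rough check on that arithmetic, the following sketch applies the ideal rocket equation with the effective exhaust velocity, dry weights, and propellant load quoted above. The function and variable names are mine, chosen only for illustration. Gravity and drag losses are not modeled.

```python
import math

VE = 3.5762        # km/sec, effective cluster exhaust velocity quoted in the text
W_PROP = 1100.0    # metric tons of ascent propellant
DRY_CARGO = 85.0   # metric tons, cargo/passenger form
DRY_TANKER = 50.0  # metric tons, tanker form


def delta_vee(w_dry, w_pay, w_prop=W_PROP, ve=VE):
    """Ideal delta-vee (km/sec) from the rocket equation."""
    mass_ratio = (w_dry + w_pay + w_prop) / (w_dry + w_pay)
    return ve * math.log(mass_ratio)


def payload_for(dv, w_dry, w_prop=W_PROP, ve=VE):
    """Payload (metric tons) that yields delta-vee dv: the Wpay formula above."""
    mr = math.exp(dv / ve)
    return (w_prop - (mr - 1.0) * w_dry) / (mr - 1.0)


dv_ascent = delta_vee(DRY_CARGO, 150.0)   # cargo/passenger form, 150 ton payload
dv_return = delta_vee(DRY_CARGO, 50.0)    # cargo/passenger form, 50 ton payload
tanker_payload = payload_for(dv_ascent, DRY_TANKER)
tankers_needed = W_PROP / tanker_payload

print(f"delta-vee at 150 tons payload: {dv_ascent:.2f} km/sec")
print(f"delta-vee at 50 tons payload:  {dv_return:.2f} km/sec")
print(f"tanker propellant-as-payload:  {tanker_payload:.1f} metric tons")
print(f"tankers to refill 1100 tons:   {tankers_needed:.2f}")
```

Run this way, the tanker payload comes out at about 185 tons and the tanker count just under 6, in line with the figures above. Small differences from 184.7 tons are just rounding in the delta-vee used.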

Using BFR/ITS at Mars

For a trip to Mars from low Earth orbit, the departure delta-vee for a Hohmann

minimum-energy orbit to Mars is around 3.71 km/sec at average orbital

conditions. For a direct entry without

stopping in Mars orbit, you let the

planet hit you from behind, as the

planet’s orbital velocity is faster than the transfer orbit’s aphelion

speed. Velocity at entry interface will

fall in the 6 km/sec range, and

aerodynamic drag kills most of that to about 0.7 km/s coming out of hypersonics

fairly deep in the Martian atmosphere.

Double or triple that for the landing burn: about 1.5-to-2 km/sec delta-vee requirement.

That’s crudely 5.21 to 5.71 km/sec delta-vee required to make a direct landing on Mars, with about 6.2 km/sec available. The difference can be used to fly a somewhat higher-energy transfer orbit, for a shorter flight time than 8 months. Faster is possible if payload is reduced.

To return, the ITS is

refilled with in-situ propellant production on Mars. It will need around 6 km/sec delta-vee

capability to launch and escape directly, with enough energy to achieve the return

transfer orbit. We assume a direct entry

at Earth, which means in turn we run into

the planet from behind, since vehicle

perihelion velocity is higher than Earth’s orbital velocity.

It will be a very demanding entry interface speed (well

above 11 km/sec): this is what stresses

the heat shield, not entry at Mars. But,

the vehicle will come out of hypersonics at about the same 0.7 km/sec

moderately high in the atmosphere. It

will need at least 3 times that as the landing burn delta vee requirement, because the altitude is higher, and the gravity is stronger. Call it 2 km/sec as a “nice round number” to

assume.

The total delta-vee requirement to ascend from the surface of Mars

and achieve a direct transfer orbit and a powered landing on Earth is therefore

in the neighborhood of 8 km/sec. That is

just about what the ITS cargo/passenger version seems capable of, if restricted to about 50 metric tons return

payload. Again, that particular payload correspondence lends

confidence to these otherwise-guessed numbers.

It also points out how critical in-situ propellant

production will be for using this vehicle on Mars. Unless this vehicle is refilled locally with

the full 1100 metric ton propellant load,

it is stranded there! Each launch

from Mars requires 240 metric tons of locally-produced liquid methane, and 860 metric tons of locally-produced

liquid oxygen. Launch opportunities are 26

months apart. Required production rates

are thus 9.23 tons/month methane, and

33.08 tons/month oxygen, at a bare

minimum, per launch.
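Those production rates are simple division, sketched here so the numbers can be re-run for other propellant loads or launch intervals. The variable names are mine. The 26-month interval and the tonnages come from the text above.

```python
MONTHS_BETWEEN_LAUNCHES = 26
PROPELLANT_TONS = {"methane": 240.0, "oxygen": 860.0}  # per launch from Mars

# Bare-minimum average production rate needed to fill one vehicle per window.
rates = {name: tons / MONTHS_BETWEEN_LAUNCHES
         for name, tons in PROPELLANT_TONS.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.2f} metric tons/month")
```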

BFR/ITS For the Moon

Some have pointed out that this vehicle could also visit the

moon. To leave Earth orbit for the

moon, the delta-vee requirement is about

3.29 km/sec. The delta-vee to arrive

into low lunar orbit is just about 0.8 km/sec,

or to land direct, about 2.5

km/sec. Those one-way totals are 4.09

km/sec to lunar orbit, and 5.79 km/sec

to land direct (remarkably close to the Mars value at min energy transfer).

To return by a direct departure from the lunar surface

requires about 2.5 km/s, or from orbit about

0.8 km/sec. Landing at Earth is largely

by aerodynamic braking, but requires

about a 2 km/sec landing burn.

Therefore, total delta-vee

requirements to return are 4.5 km/sec from the surface, or 2.8 km/sec from lunar orbit.

One could conclude that the ITS could ferry cargo to lunar orbit and return entirely unrefilled, a trip requiring a total of 6.89 km/sec delta-vee capability. This is not available at 150 metric tons of payload, but it is available at a payload somewhere between 50 and 150 tons. I get about 102 metric tons of payload.

The requirements to land and return entirely unrefilled

would be 10.29 km/sec, which is

out-of-reach even at only 50 tons payload.

To use the ITS on the lunar surface will require propellant production

on the moon, although likely at somewhat

lower rates and quantities than at Mars.
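The lunar numbers can be checked the same way. This sketch adds up the delta-vee legs quoted above and solves the ideal rocket equation for the payload the cargo/passenger form could carry on each unrefilled round trip. The names are mine, and the 85 ton dry weight, 1100 ton propellant load, and 3.5762 km/sec exhaust velocity are the values estimated earlier in this article.

```python
import math

VE = 3.5762      # km/sec, effective exhaust velocity from earlier in the article
W_PROP = 1100.0  # metric tons of propellant
W_DRY = 85.0     # metric tons, cargo/passenger form

LEO_DEPARTURE = 3.29   # km/sec, leave Earth orbit for the moon
LUNAR_ORBIT = 0.8      # km/sec, enter (or leave) low lunar orbit
LUNAR_SURFACE = 2.5    # km/sec, land direct (or depart direct)
EARTH_LANDING = 2.0    # km/sec, landing burn at Earth

orbit_round_trip = LEO_DEPARTURE + LUNAR_ORBIT + LUNAR_ORBIT + EARTH_LANDING
surface_round_trip = LEO_DEPARTURE + LUNAR_SURFACE + LUNAR_SURFACE + EARTH_LANDING


def max_payload(dv):
    """Payload (metric tons) for a required delta-vee; negative means out of reach."""
    mass_ratio = math.exp(dv / VE)
    return W_PROP / (mass_ratio - 1.0) - W_DRY


for label, dv in (("lunar orbit and back", orbit_round_trip),
                  ("lunar surface and back", surface_round_trip)):
    print(f"{label}: {dv:.2f} km/sec, payload {max_payload(dv):.0f} metric tons")
```

A negative payload for the surface round trip is just another way of saying that trip is out of reach without refilling on the moon.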

Guessing Reusable Performance of BFR

A related point: if

we presume the fully-loaded ITS uses essentially all of its 1100 tons of

propellant achieving low Earth orbit, we

can back-estimate the delta-vee that is actually available from its BFR first stage, even allowing for flyback. Earth orbit velocity is just about 8.0

km/sec. Allowing 5-10% gravity and drag

losses for a vertical ballistic trajectory,

the min total delta vee is about 8.4-8.8 km/sec. About 6.1 of that is from the ITS second

stage. The first stage need only supply

2.3-2.7 km/sec, which means the staging

velocity is just exoatmospheric at around 2.5 km/sec. It should easily be capable of ~5

km/sec, so the difference is for flyback

all the way to launch site, and

propulsive landing.
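The first-stage back-estimate above is just a subtraction, sketched here with the same round numbers. The function name is mine, and the 5-10% loss allowance is treated as a simple multiplier on orbital velocity, as in the text.

```python
ORBIT_VELOCITY = 8.0   # km/sec, low Earth orbit velocity used in the text
SECOND_STAGE_DV = 6.1  # km/sec, supplied by the ITS second stage


def first_stage_dv(loss_fraction):
    """Delta-vee (km/sec) the first stage must supply for a given loss allowance."""
    total_required = ORBIT_VELOCITY * (1.0 + loss_fraction)
    return total_required - SECOND_STAGE_DV


for loss in (0.05, 0.10):
    print(f"{loss:.0%} losses: first stage supplies {first_stage_dv(loss):.1f} km/sec")
```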

Suborbital Intercontinental Travel

Finally, there has

been some excited talk about using the BFR/ITS for suborbital high speed

transportation across intercontinental ranges here on Earth. That is a ballistic requirement similar to

that of an ICBM. The burnout velocity of

the typical ICBM is around 6.7 km/s. Allowing

5-10% margin for gravity and drag losses,

the delta-vee necessary to fly intercontinentally is 7 to 7.3

km/sec, plus for the ITS, about 2 km/sec for the landing burn. Total is thus 9 to 9.3 km/sec delta-vee.

This is way beyond the delta-vee capability of the ITS stage alone. In addition, 4 of its 6 engines will not operate at sea level, and even if they did, the total 6-engine thrust of the ITS stage (about 11,000 kN) is less than its fully loaded weight (about 13,000 kN or more). But this delta-vee is within reach of the two-stage BFR/ITS combination (6.2 to 7.9 km/sec ITS and ~2.5 km/sec BFR for 8.7 to 10.4 km/sec), and likely with a little less payload than the 150 tons typical to Mars. Maybe something in the vicinity of 100 tons.

Final Remarks

These estimates are rough. I did not correct sea level thrust to vacuum for one thing, my delta vee requirements are approximate for another, and I did not explore the effects of using only the vacuum engines for higher specific impulse out in space.

Even so, these results are very intriguing. These calculations were made pencil-and-paper with a calculator. Nothing sophisticated.

Monday, October 16, 2017

ASUS Hardware, Windows Software? Never Again!

My ASUS X553M laptop with factory Windows 10 operating

system is a low-quality, unreliable piece

of crap! So is its operating system! (Its predecessor was a Toshiba laptop running

Windows 8/8.1. The hardware failed at

age 2: the display hinges broke. I hated Windows 8 from the moment I saw it.)

This ASUS machine/Windows software combination has several very

serious issues that Best Buy’s Geek Squad cannot, or will not, help me with.

All these major issues are fatal,

as far as my estimate of quality is concerned. That list follows below.

I would appreciate comments from readers as to what machines

or operating systems might possibly be acceptable (since this machine and

operating system are so very clearly not).

I need to do word processing, powerpoint-type slides, spreadsheet work with plotting, and a shell within which to run old-time DOS

software. I need something that can use

wi-fi to access the internet and email. I

want a battery pack that I can pull, to

force a restart, when all else fails.

ASUS X553M / Windows 10 Fatal Issues List:

#1. The screen dims and flashes or flickers, when not plugged into the AC power

supply. This renders the machine

unusable, in spite of the battery being

charged. When the issue first

started, it did this with about 50%

battery charge remaining, as indicated

on the display. This rapidly got worse over

a period of only months, accelerated to

starting the flicker at 90% battery indicated.

Now it will not run without flashing even at 100% indicated charge

state. Nothing in the Windows settings

affects this.

#2. The machine turns off its wi-fi device

spontaneously, without warning, and for no perceptible reason. This happens erratically and

unpredictably. The frequency with which

it occurs is increasing as time goes by.

Most of the time, it still sees the wi-fi network, and will reconnect if you command it. But for a significant portion of the time, it does not see the wi-fi network, and so cannot be commanded to reconnect. The only recourse in that case is a reboot.

#3. This machine on occasion locks up without warning, rendering the keyboard and the mouse totally

inoperative. The only way to deal with

this is a reboot. It always loses all

data up to the last save.

#4. I cannot trust

the reboot to be effective, unless I

unplug the AC power, and either select full

shutdown (not restart), or else use the

power switch. I have noticed that the

tiny indicator lights do not go out, and

that the issues the reboot was supposed to correct do not reliably get

corrected, unless I go for the complete

shutdown with no AC connected. There is

no battery pack to pull, as the battery

is all-internal.

#5. The machine

erratically and unpredictably ignores clicks of the mouse. This problem comes and goes erratically.

#6. The keyboard has

unreliable keys, and a slow response to

keystrokes. You can type fast, and it will miss a lot of letters. Some are worse than others. Those will often ignore slow repeated

keystrokes, even ignore continuous

hold-down of the offending key. Plus, the symbols wore off the keys in only a year.

#7. I haven’t seen a

stable operating system out of Microsoft since DOS, which would fit on a 1 megabyte floppy disk. The entire fundamental Windows concept is

flawed, forcing people to learn a second

language (icons), which was (and still

is) unnecessary. The last DOS machine I

had also had a little shell program (from a German company) that did a

text-based point-and-click mouse controlled interface. This interface did everything for file

navigation that Windows ever did, but

would fit on another 1 megabyte floppy disk without even filling it.

#8. Windows 8/8.1/10 are all useless pieces of crap totally

bogged down with useless touch-screen crap that is totally inappropriate to an

ordinary laptop. That kind of marketing

arrogance totally negates any possible past reputation Microsoft ever had for

quality or for customer service.

#9. All of the Windows operating systems are very hard-to-remove

(you must wipe the hard drive), behaving

exactly like a virus or malware, ever

since Windows 95. The last semi-stable

version I had was Windows 3.1, but it

was nowhere near as stable as DOS 2 or DOS 6,

which never corrupted themselves or required reboots.

#10. The Windows

operating systems are all self-corrupting,

and they do not clean up the messes they make, which clog up your hard drive memory, and bog down your machine’s operating speed. DOS did not do that.

Monday, October 2, 2017

Machine Guns in Las Vegas?

Update 10-3-17: in red text below.

Update 10-4-17: in blue text below.

Update 10-6-17: in purple text below.

Under federal law, a “machine gun” is a firearm that shoots more than one bullet per trigger pull. The synonym for this is “fully-automatic”. A “semi-automatic” weapon is one that sends one bullet per trigger pull, loading the next round automatically. If it doesn’t load the next round automatically, that means the user must operate some sort of manual bolt or other mechanism to load the next round. Bolt-action rifles, pump or breakdown shotguns, and ordinary revolver handguns fall into that last category.

The M-16 used by US armed forces is indeed a machine

gun, a fully-automatic weapon, although it can be operated as a semi-automatic

single-shot weapon as well. The same is

true of the Russian-developed Kalashnikov AK-47. These are true “assault weapons” for military

use precisely because they really can be machine guns. A military unit not so armed is at a lethally-distinct

firepower disadvantage when confronted by such weapons.

The AR-15 (and most modern hunting and sport guns) is a

semi-automatic weapon, not a machine gun

/ fully-automatic weapon. The fact that

an AR-15 looks exactly like an M-16, has

absolutely nothing to do with its rate of fire. Calling it an “assault weapon” is

wrong, because no military unit today would

ever go into combat with the AR-15. They

would be totally outgunned by any group with fully-automatic weapons. It’s not about what the gun looks like, it is entirely about what the gun can

actually do. Simple common sense.

Civilians in this country currently can indeed own or

possess machine guns, but what devices they

can own, and what they can do with them,

is very,

very, very severely

restricted. This began with the National

Firearms Act (NFA) of 1934. That law came

about because the mafia was causing mass death in the streets with the

venerable old “Tommy gun”, which really

was a machine gun. It severely

restricted civilian ownership of fully automatic weapons, short-barrel rifles and shotguns, and certain explosives. It was amended in 1968 and again in 1986.

The 1986 amendment restricted civilian ownership of fully

automatic weapons to only those made before 1986, only with payment of a $200 tax along with an

enormous and very invasive application,

and only with a very, very

thorough ATF background investigation,

plus requirements for notification of the ATF any time the owner

traveled with any of those devices.

Such devices could not be updated or repaired with modern

parts. Parts for such devices are

largely out-of-reach of all but the richest today. There are no exceptions to allow for the

ownership of anything newer than 1986.

There are no exceptions to any of the other requirements.

This status was superseded for a while in 1994 to disallow entirely

the civilian ownership of those pre-1986 machine guns, short-barrel guns, and devices,

but that restriction expired in 2004.

So, we are still under the 1986

version of the law today.

In all 50 states, it

may indeed be legal to own machine guns,

but only in accordance with the federal law! If the possession or use is not in accord

with federal law, then such possession

or use is presumed illegal under state law,

period! Some states impose

further restrictions, some do not. And that federal law is exactly the 1986

update of the 1934 NFA law. Period. No exceptions.

Modifying a semi-automatic weapon into a full-automatic

weapon is indeed possible, but it is

generally not very easy to do. It

requires appropriate tools and knowledge and experience. It also requires testing. This is already illegal under any

circumstances, no exceptions.

Update 10-4-17: Two new technologies for increasing firing rate have come to light. These are the "bump stock" and the "gat-crank". These act to increase the firing rate of a semi-automatic weapon to that of a fully-automatic weapon, without modifying the loading mechanism inside the weapon. These are therefore technically legal, but they definitely do violate the intent of the 1986 prohibition on all but grandfathered machine guns. In my opinion, this is cheating, and should not be allowed.

What the shooter in Las Vegas did, and what motivated him, are still the subjects of investigation. Nothing is yet known with any certainty, and such certainty is unlikely for quite a while yet. Update 10-6-17: information keeps surfacing in news reports that points to mental illness of some kind in this shooter. He got his guns legally, because no judge ever had him committed. If you look at the earlier article cited below, that "leak" of guns into the hands of crazies is the most common cause of these mass shooting incidents!

The best speculations are (1) he sneaked some 10 long-barrel weapons (the weapon count has been climbing in subsequent reports, both in the hotel and at his home) into his hotel room overlooking the outdoor concert venue, (2) at least some of those weapons were machine guns, based on the high rates of fire evident from the audio recordings of the event, and (3) he fired into a dense crowd that could not move quickly, so that without aiming, he was certain to hit lots of people.

Item 3 means that fully-automatic weapons are not required to exact a huge death toll, but they do considerably raise it. Not even semi-automatic weapons are needed. A considerable death toll could still be expected with just single-shot, bolt-action rifles. So, it’s not really about the gun, it’s much more about the situation: a densely-packed, immobile crowd as the target from a nearby high place.

Every time there is such a mass shooting event, there is an immediate knee-jerk reaction: a call for tighter gun control. Always the same things are proposed, and almost none of them would have prevented any of these events, including this one! The exceptions are (1) selling weapons too easily to crazy folks, and (2) loopholes to the required background checks we already have.

The problem here really isn’t so much the guns; it is what motivates people to want to kill their neighbors. What causes that? I have never heard a good answer to that question. Maybe it is past time to go find out.

Update 10-3-17: To find out what the gun violence is really trying to tell us, go see my analysis of excerpts from the Mother Jones gun violence database. It is not what you think! This analysis is in the article titled "What the Gun Violence Data Really Say" dated 6-21-2016 on this website. It has a list of titles and dates for other articles I have also written on this subject. The navigation tool on the left gets you there most easily. Click on the year, then on the month, then on the title.

For those unwilling to go to the cited article and examine the data for themselves, here is the short form of the message: (1) we have a major "leak" of guns legally sold to people who are mentally ill, but have never been so ruled by a court, (2) we have a major problem with inadequately-defended (or entirely-undefended) gun-free zones, which also invite terrorist attack, and (3) the "usual" gun control proposals of "assault" weapons bans, clip size limits, and the like, have already been tried and were already found to be ineffective.

It's both that simple and that ugly. Fix those two items properly, and it looks to me like most of this problem goes away. Item 3 tells you what not to do. Update 10-4-17: I also recommend outlawing "bump stocks" and "gat-cranks". That won't prevent the incidents, but it will reduce the death tolls.

Saturday, September 23, 2017

Why So Many Illegal Immigrants?

Depending upon whom you believe, there are some 10 to 12 million illegal immigrants in this country. Why? (I’ll warn you ahead of time: you won’t like the real answer.)

Short form:

This traces directly to inaction by Congress since the end of WW2, when they ended the Bracero program with the mass deportation of Mexican agricultural workers.

Long form follows:

There are H1B visas for technical people, and there are H2A and H2B visas for unskilled labor, intended to be work permits for mostly-Mexican laborers. These H2A and H2B visas cover the laborers, plus the dependents they bring with them. H2A visas are specifically for migrant farm workers, and H2B visas are for all the other migrant worker trades, such as the truly grungy stuff at construction sites: concrete work, road work, lawn care, toilet cleaning at motels, etc.

Because these jobs are both low-paid and very hard, unpleasant work, almost no Americans really try to apply for such jobs, despite what some claim. We are awash in fake news about this issue, among many others. You can recognize a fake news echo chamber by the lack of divergent opinions. It really is that simple.

The low pay for the immigrant workers is a vicious cycle: because most of the workers are illegally here, their employers simply extort labor at very low pay from them. This is immoral and unethical, but VERY widespread. If these workers were legal, pay for that work would have to rise, and more Americans might even apply for such jobs.

These workers are a huge factor in our economy: reportedly around 15% of construction jobs, and apparently almost all the crop harvesters we have had since WW2. If you deport them all, important sectors of our economy will crash, and you will go hungry because of high-priced foreign food imports. Those are the real facts, unpleasant though they are.

Why are these workers mostly illegal and thus subject to extortion into wage slavery? Because the worker permit visa quotas controlled by Congress are completely out-of-line with the "ground truth" of our economy. The demand and corresponding need are there; the accommodation is not.

According to the Brookings Institution, the annual cap on H2A and H2B visas totals about 125,000 to 150,000. That's roughly a factor-of-100 out-of-balance with reality: the 10 to 12 million that are here doing the work, and paying taxes on their meager wages, despite what some say.

What no one wants to hear (but the painful truth will set you free, when political lies won’t): we brought this on ourselves; more specifically, our Congress did, with over 7 decades of inaction on this issue. That is utterly inexcusable.

Worse, some of them run for re-election promising to do the wrong thing about this problem! But we keep electing and re-electing all the idiots that did this!

So, stop re-electing them! Elect instead somebody who will really fix this, by actually doing something about the out-of-balance visa quota system. You’ll see this problem melt away in a very few years, if this imbalance is corrected.

(And by the way, fixing this permanently fixes the DACA problem, as well.)

Sunday, August 13, 2017

North Korea Has Come to a Head

Note: this article appeared in a slightly shorter form as a guest column on the opinion page of the Waco "Tribune-Herald", Sunday 8-13-17.

Update 8-19-17: I have appended some very specific recommendations for what to do about this problem at the end of this article.

The North Korea atomic weapons crisis has come to a head. Understanding this situation is a whole lot easier than many think. Like a boil, it must be lanced.

They now have the 4 elements needed to present a credible nuclear missile threat to the US and many other nations. Those are a big-enough rocket, a nuclear warhead small enough to ride that rocket, a guidance system to get it near its target, and a heat shield for the warhead to survive reentry.

The recent high-arcing rocket tests demonstrate they have made sufficient progress on all four fronts. The trajectory shows the capability of hitting the US if aimed differently, the intelligence communities agree they have a bomb small enough to ride that particular rocket, and the fact that these test flights have not gone astray shows that the guidance works. “Something” from these rockets has been tracked to impact in these tests, which very strongly suggests that the heat shield works.

Whether this ICBM is actually reliable is beside the point, same as it was with ours and Russia’s in the late 1950’s. If they launched several, at least a few would get to the target. Now that he has a credible weapon, Kim Jong Un is ready to play the age-old blackmail game. This is a pattern known across millennia of history, but most folks would recognize the name Adolf Hitler.

The game is played thusly: the aggressive one makes a threat to do something “unspeakable” unless he gets what he wants. He must be willing to risk getting slapped down for it, but throughout history, most of those who are willing to make the threat have been willing to take that risk.

Between the World Wars, that “unspeakable” threat was to wage war at all, based on the horrifying experiences of World War 1. Since World War 2, the “unspeakable” threat has been to wage nuclear war. Notice how lots of conventional wars have been waged since then? Only the technology deemed “unspeakable” has changed. The game remains the same.

Kim Jong Un may seem crazy to us, but he is crazy like a fox. It is not yet clear what he wants, but he has already made the threat to nuke Guam. His risk bet is that we won’t actually go to war over an island far from our shores. That is why he has not yet threatened the lower 48 states.

But as this escalates, Hawaii and Alaska are at risk, because of US military assets in both places, plus our allies in the region. Eventually, he would attack the lower 48 as a final act of desperation. We’ve seen this pattern many times before.

And escalate it will! Just like with Hitler and the Nazis in 1930’s Europe. This scenario has played out countless times over history. Kim Jong Un is following a long-established pattern like it was a cooking recipe. This is perfectly predictable.

Of course, there is no excuse not to pursue a diplomatic solution. Basic humanity on our part demands it. But, don’t hold your breath for it to work! It didn’t work with Hitler, or his predecessors.

What worked was raw naked force. The only question is how much you have to use, and that increases as time goes by. This is very much like a boil: the longer you let it fester, the more it hurts when you lance it, and the more damage there is to heal afterwards.

It is very important that we not strike the first blow, and that would be true even without any pronouncements from the Chinese as to whether they get involved or stay neutral. It is also important that we not resort to half measures, such as only striking test sites.

This is the main lesson of World War 2: you go “whole hawg or none”. If North Korea strikes Guam or anywhere else, we take out Kim Jong Un and his entire government. Regime change or nothing. Period.

It would be nice if we could kill Kim Jong Un and all his government functionaries by destroying them in their big government complex in Pyongyang, without killing all the civilians in the surrounding city. Then there’s no need to send one tank or one soldier across the border, or to commit genocide by nuking the city.

The size of that complex demands that we use a deep-penetrating “bunker-buster” projectile fitted with a small nuclear warhead, exploded deep underground, turning the complex into a contained rubble pile in a pit, too radioactive to enter. For the most part, the city and the people survive; only Kim Jong Un and his government die.

But I haven’t ever heard that we actually have such a weapon! North Korea has been festering since 1953, so it’s not like we haven’t foreseen this problem coming. This lack for so long a time makes me think we have spent an awful lot of money on the wrong weapons, not the ones we really needed.

Think about THAT the next time you go vote, although it is now too late to do anything about any of this.

Meanwhile, sleep tight!

Update 8-19-17: Appended Specific Recommendations:

Specific Recommendations Regarding North Korea

First, privately among ourselves, we must agree upon three things:

(1) We will put an end to the regime if they launch any sort of weapon at any US territory or ally, anywhere in the world.

(2) We will accomplish this from a distance: no invasion, no occupation.

(3) We would like to do this with minimal loss of civilian life on all sides, but accomplishing an end to that ugly regime is higher priority than saving those lives.

Second, we tell North Korea publicly that “we will put a permanent end to their regime if they launch any weapon toward any US territory or ally, anywhere in the world”. This should be calm, quiet, succinct, and very much to the point. No questions, no discussion. No bluster. Just that fact.

Third, we tell China very privately that we will put an end to the North Korean regime because they did not control the rogue regime that they created. We tell them we will not invade or occupy, because that is not in our interests. It was in their interests to control what they created, but they did not do their job.

Our action will inevitably leave a failed state on their doorstep, something neither of us wanted. But because they did not do their job, it is only fair that they clean up the failed state mess that we leave for them. No questions, no discussion. Not negotiable. Best for them and for us.

Fourth, among ourselves, and probably in a deeply-classified setting, we must address exactly how we will utterly destroy that regime from a standoff distance, both “right now”, and within the next year or so. There will be no invasion (not even temporarily), no occupation. It is best to do this without even sending manned aircraft.

We do this with standoff weapons, and preferably not ICBM’s, which could be mistaken for an attack on China. Tactical (not strategic) weapon trajectories are an imperative here.

The goal is to suddenly destroy the entire governmental complex in Pyongyang, in a complete surprise attack, at a time we choose, not just an immediate knee-jerk response. The hope is to catch Kim Jong Un and his top staff there, and kill them all in the sudden utter destruction of that complex. If we miss him, then we target other installations where he might be, in a similar fashion. We keep up the strikes until we get him, no matter how long it takes. Then we quit.

A tactical missile with a nuclear warhead can do that job right now, but with enormous civilian casualties and the destruction of much of the city. That outcome would resemble Hiroshima and Nagasaki, so it is imperative that they strike first, no if’s, and’s, or but’s about that. Such a weapon could be launched from Japan, South Korea, or a ship (or submarine) at sea close by.

However, some sort of tactical missile might possibly be fitted with a deep-penetrating “bunker-buster” nuclear warhead. It would likely be a larger tactical missile due to the weight of the Earth penetrator and the necessary speed at impact.

Such a strike would excavate a cavity under the foundations of the government complex, shatter that complex into rubble, and contain that radioactive rubble by its collapse into the excavation pit. There would be some surface fallout, but not nearly as much as the usual “city-busting” scenario. In this underground nuclear scenario, most of Pyongyang and its civilian population would survive in good shape. That is the preferred scenario.

The questions we must ask ourselves in this private, classified discussion are two-fold.

(1) Do we possess such a weapon? If yes, we’re “good-to-go” immediately.

(2) If not, how soon could we have one? And then get on with it as a “crash program”. Speed is crucial.

Finally, I would add that this crisis has been long foreseen. If we have no such suitable weapon to end it with minimal civilian casualties, why is that? How do we fix that management lack?

(end update 8-19-17)

Update 8-23-17: the same nuclear bunker-buster I suggested for decapitating the North Korean regime, would work against the underground hardened nuclear production facilities in Iran. That need would arise if they choose to violate the agreement and start building bombs (a real risk). I repeat: do we have such a weapon? If not, why not?

Update 9-19-17: After thinking about it for a while, I believe the real reason Kim Jong Un wants nuclear weapons is to extort the reunification of Korea on his terms. The threat of nuclear attack "wherever" is the threat by which to ward off the counter-invasion that topples his regime. I still say we do this by standoff strike, not invasion. We leave the failed state on China's doorstep to clean up. It's only fair, they created this abortion.

Previous Related Articles:

date, search keywords

"article title"

4-8-17, current events, Mideast threats, North Korean rocket test

"The Time Has Come to Deal with Iran and North Korea"

4-6-09, North Korean rocket test

"Thoughts on the North Korean Rocket Test And Beyond"

12-13-12, current events, North Korean rocket test

"On the 12-12-12 North Korean Satellite Launch"

2-15-13, Mideast threats, North Korean rocket test

"Third North Korean Nuclear Test"

4-5-13, current events, North Korean rocket test

"North Korean Threat Overblown, So Far"

9-12-15, bad government, bad manners, current events, idiocy in politics, Mideast threats

"Iran Nuclear Deal Nonsense"

Tuesday, July 4, 2017

Heat Protection is the Key to Hypersonic Flight

The problem is not so much propulsion as it is heat protection. The reason has to do with the enormous energies of high speed flight, and with steady-state and transient heat transfer. Any good rocket can push you to hypersonic speeds in the atmosphere. But it is unlikely that you will survive very long there!

The flow field around most supersonic and hypersonic objects looks somewhat like that in Figure 1. There is a bow shock caused by the object parting the oncoming air stream. Then, the flow re-expands back to near streamline direction along the side of the object. Then it over-expands around the aft edge, having to experience another shock wave to straighten out its direction parallel to free stream again. This aft flow field usually also features a wake zone of one size or another, as shown.

The conditions along the lateral side of the object are not all that far from free stream, in terms of static pressures, flow velocities, and air static temperatures. One can compute skin heat transfer using those free-stream values as values at the edge of the local boundary layer, and be “in the ballpark”. That is what I do here, for illustrative and conceptual purposes.

Once flow is supersonic, the boundary layer behavior isn’t so simple any more. There is an energy conservation effect, deriving from the very high kinetic energies, that one simply does not see in subsonic flow. The value of that kinetic energy shows up as the air total temperature Tt, which is the upper bound for how hot things could be. Air captured on board by any means will be very close to Tt, if subsonic relative to the airframe after capture. This includes any “cooling air” one might use!

In addition, there is “viscous dissipation”, which has the effect of raising the actual (thermodynamic) temperature of the air in a max shearing zone within that boundary layer, to very high temperatures. The peak of this temperature increase is called the recovery temperature Tr. The difference between this recovery temperature and the local skin temperature Ts is what drives air friction heat transfer to the skin, not the difference between the air static temperature and the skin temperature, as is typical in subsonic flow.

See Figure 2. The temperature rise from static to recovery is around 88 to 89% of the rise from static to total, in turbulent flow, which this almost always is.
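The relation between static, total, and recovery temperature can be sketched in a few lines of Python. This is only a rough illustration, not the method behind the figures: it assumes ideal-gas air with a constant specific heat ratio of 1.4 (an assumption that gets worse as temperatures climb), and it takes the recovery factor as 0.89 from the 88 to 89% figure just quoted. The function names are made up for this sketch.

```python
GAMMA = 1.4             # assumed constant specific heat ratio for air
RECOVERY_FACTOR = 0.89  # turbulent value, from the 88 to 89% figure in the text


def total_temperature(t_static, mach, gamma=GAMMA):
    """Total (stagnation) temperature, in the same absolute units as t_static."""
    return t_static * (1.0 + 0.5 * (gamma - 1.0) * mach ** 2)


def recovery_temperature(t_static, mach, r=RECOVERY_FACTOR, gamma=GAMMA):
    """Recovery temperature: static plus a fraction r of the rise to total."""
    t_total = total_temperature(t_static, mach, gamma)
    return t_static + r * (t_total - t_static)


# Example: cold stratosphere, about -69.7 F, which is about 390 R absolute.
T_STRATOSPHERE_R = 390.0
for mach in (2.0, 3.0, 4.0, 5.0):
    tt = total_temperature(T_STRATOSPHERE_R, mach)
    tr = recovery_temperature(T_STRATOSPHERE_R, mach)
    print(f"Mach {mach:.0f}: Tt = {tt - 459.67:7.0f} F, Tr = {tr - 459.67:7.0f} F")
```

Temperatures must be absolute (Rankine or Kelvin) going in; the example converts back to Fahrenheit only for printing.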

Most heat transfer calculations for this kind of flow regime take the basic form and sequence illustrated in Figure 3. “How high and how fast” determines the conditions of flow, ultimately. Total and recovery temperatures may be computed from this, and total temperature is conserved throughout the flow field around the object, regardless of the shock and expansion processes. The flow alongside the lateral skin is not far from free-stream to first order, and that may be used to find out “what ballpark we are playing in”. Better local edge-of-boundary layer estimates must come from far more sophisticated analyses, such as computational fluid dynamics (CFD) codes.

In Figure 3, the process starts by determining recovery temperature. The velocity, density, and viscosity at the edge of the boundary layer won’t be vastly different from free stream, unless you are really hypersonic, or really blunt (detached bow shock). The various correlations account for this.

Using whatever dimension is appropriate for the selected heat transfer correlation, one computes Reynolds number Re. Low densities at high altitude lead to low values, and vice versa. High speeds lead to high values. Different correlations have the density and viscosity (and thermal conductivity) evaluated in different ways and at different reference temperatures. You simply follow the procedure for the correlation you selected. Sometimes this is neither simple nor straightforward.

The complexity of these correlations varies. My favored lateral skin correlations use a T* for properties evaluation that is T* = mean film plus 22% of the stagnation rise above static. My favored slower-than-reentry stagnation zone correlation evaluates fluid properties at total conditions behind a normal shock. In the stagnation case, Reynolds number is based on the pre-shock freestream velocity.

The next step is the correlation for Nusselt number Nu. This nearly always takes the form of a power function of Re (plus some other nontrivial factors), usually with an exponent in the vicinity of 0.8 or so. Nusselt number is then converted to heat transfer coefficient h, using the appropriate dimension and the appropriately-evaluated thermal conductivity of the air, for the selected correlation.

The heat transfer rate is then as given in Figure 3, which shows the Tr – Ts temperature difference.
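The Re to Nu to h to Q/A sequence can be sketched as below. This is a hedged illustration only. The Nusselt correlation used here is the common textbook local turbulent flat-plate form, Nu = 0.0296 Re^0.8 Pr^(1/3), which is swapped in as an assumption and is not the correlation favored in this article. The fluid properties are passed in as plain numbers; evaluating them at the right reference temperature is left to whichever correlation one actually selects. All function names and the example numbers are hypothetical.

```python
def reynolds_number(density, velocity, length, viscosity):
    """Re = rho * V * L / mu, all in consistent units."""
    return density * velocity * length / viscosity


def nusselt_turbulent_flat_plate(re, prandtl=0.71):
    """Assumed textbook form, not the article's correlation: Nu = 0.0296 Re^0.8 Pr^(1/3)."""
    return 0.0296 * re ** 0.8 * prandtl ** (1.0 / 3.0)


def heat_transfer_coefficient(nusselt, conductivity, length):
    """h = Nu * k / L, using the same length as in Re."""
    return nusselt * conductivity / length


def convective_heat_flux(h, t_recovery, t_skin):
    """Q/A = h * (Tr - Ts), driven by recovery (not static) temperature."""
    return h * (t_recovery - t_skin)


# Hypothetical SI example values, chosen only to exercise the chain.
re = reynolds_number(density=0.1, velocity=900.0, length=1.0, viscosity=1.5e-5)
nu = nusselt_turbulent_flat_plate(re)
h = heat_transfer_coefficient(nu, conductivity=0.03, length=1.0)
q = convective_heat_flux(h, t_recovery=650.0, t_skin=300.0)
print(f"Re = {re:.3g}, Nu = {nu:.3g}, h = {h:.3g} W/m2-K, Q/A = {q:.3g} W/m2")
```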

One should note that because both density (which is in Re) and thermal conductivity k (which is in h) are low at high altitudes, the computed values of h will be substantially smaller at high altitudes in the thin air. High speeds act to raise h, and to very dramatically raise Tr and Tt. That last effect is far from linear: the temperature rise above static grows with the square of the speed.

Having the heat transfer rate is only part of the problem. One must also worry about transient vs steady-state effects. If the skin is completely uncooled in any way, it is then only a heat sink of finite capacity, with the convective input Q/Aconv = h (Tr - Ts). One can use material masses and specific heats to estimate the heat that is sinkable as skin temperature rises. The highest it can reach is Ts = Tr, where it is fully “soaked out” to the recovery temperature. That zeroes heat transfer to the skin.

The time it takes to soak out can be very crudely estimated as 3 “time constants”, where one “time constant” is the heat energy absorbed to soak out all the way from initial Ts to Tr, divided by the initial heat transfer rate when the skin is at the initial low Ts.
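That crude soak-out estimate is easy to put into code. The sketch below works per unit skin area and assumes a constant h and constant specific heat, which are simplifications of this illustration and not claims from the article. The steel-like numbers in the example are hypothetical.

```python
def time_constant(mass_per_area, specific_heat, h, t_recovery, t_skin_initial):
    """One 'time constant': energy to soak out, divided by the initial heating rate."""
    delta_t = t_recovery - t_skin_initial
    if delta_t <= 0.0:
        raise ValueError("recovery temperature must exceed the initial skin temperature")
    energy_to_soak = mass_per_area * specific_heat * delta_t  # J/m2
    initial_heat_flux = h * delta_t                           # W/m2
    return energy_to_soak / initial_heat_flux                 # seconds


def soak_out_time(mass_per_area, specific_heat, h, t_recovery, t_skin_initial,
                  n_constants=3.0):
    """Crude soak-out time, taken as 3 time constants per the text."""
    return n_constants * time_constant(
        mass_per_area, specific_heat, h, t_recovery, t_skin_initial)


# Hypothetical example: 2 mm steel-like skin (about 7850 kg/m3, 490 J/kg-K),
# with an assumed h of 200 W/m2-K.
skin_mass = 7850.0 * 0.002  # kg per m2 of skin
t_soak = soak_out_time(skin_mass, 490.0, 200.0, t_recovery=900.0, t_skin_initial=300.0)
print(f"Estimated soak-out time: {t_soak:.0f} s")
```

Note that the temperature difference cancels out of the ratio, so with constant h the time constant reduces to mass per area times specific heat over h. Thin skins soak out fast.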

More complex steady-state situations must find the equilibrating Ts when there is convective input from air friction, conductive/convective heat transfer into the interior of the object (something not illustrated here), and re-radiation from the hot skin to the environment. In high speed entry, there is also a radiative input to the skin from the boundary layer itself, which is an incandescent plasma at such speeds, and this is very significant above about 10 km/s speeds.

Not covered here in the first two estimates are heat transfer correlations for nose tips and leading edges. Those heat transfer coefficients tend to be about an order of magnitude higher than the coefficients one would estimate for “typical” lateral skin. Stagnation soak-out temperatures should really be nearer Tt than Tr, although those temperatures are really very little different.

Suffice it to say here that if one flies for hours instead

of scant minutes or seconds with uncooled skins, they will soak out rather close to the

recovery temperature Tr or total temperature Ttot. That brings up practical material

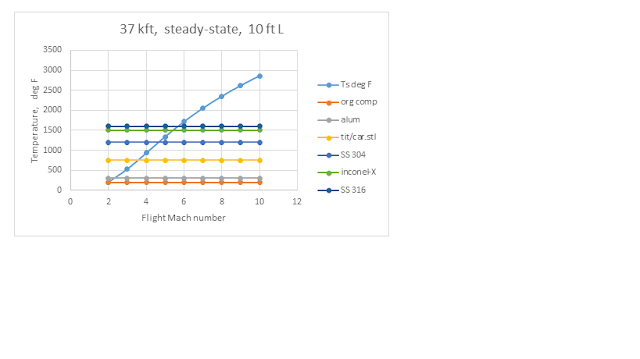

temperature limits. See Figures 4

and 5.

For almost all organic composites, the matrix degrades to structural uselessness by the time it reaches around 200 F. The fiber might (or might not) be good for more, but without a matrix, it is useless. For most aluminum alloys, structural strength has degraded to under 25% of normal by the time it reaches about 300 F, which is why no supersonic aircraft made of aluminum flies faster than Mach 2 to 2.3 in the stratosphere, and slower still at sea level. Dash speeds higher are limited to several seconds.

Carbon steels and titaniums respond to temperature very similarly. It is a very serious mistake to think that titanium is a higher-temperature material than carbon steel! Titanium is only lighter than steel. And you “buy” that weight savings at the cost of far less formability potential with titanium. Both materials are pretty-much structurally “junk” beyond about 750 F. Various stainless alloys have max recommended use temperatures between 1200 and 1600 F. Inconel is similar to the higher end at about 1500 F. There are a very few “superalloys” that can be used to about 2000 F, give or take 100 F.

Figure 4 compares steady-state recovery (max soak-out) and total temperatures to material limitations on a standard day at sea level. Max speed for organic composites is barely over Mach 1, and just under Mach 2 with aluminum. Steel and titanium are only good to about Mach 2.5, unless cooled in some way. Stainless steels can get you to about Mach 3.5-to-4, the superalloys not much higher.
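For a rough cross-check of those speed limits, one can invert the recovery temperature relation to get the Mach number at which an uncooled skin soaks out to a given material limit. This is a sketch under stated assumptions: constant specific heat ratio 1.4, recovery factor 0.89, and a sea level standard day of 59 F. The material limits are the round numbers quoted above. Expect results in the same ballpark as the figures, not an exact match, because the constant-property assumption is crude.

```python
import math

F_TO_R = 459.67  # offset from Fahrenheit to Rankine


def mach_limit(t_limit, t_static, r=0.89, gamma=1.4):
    """Mach at which recovery temperature equals t_limit (absolute units)."""
    if t_limit <= t_static:
        return 0.0
    return math.sqrt((t_limit / t_static - 1.0) / (r * 0.5 * (gamma - 1.0)))


# Material soak-out limits in deg F, taken from the round numbers in the text.
MATERIAL_LIMITS_F = {
    "organic composite": 200.0,
    "aluminum": 300.0,
    "carbon steel / titanium": 750.0,
    "stainless (low end)": 1200.0,
    "superalloy": 2000.0,
}

T_SEA_LEVEL_R = 59.0 + F_TO_R  # standard day at sea level (assumed)
for name, limit_f in MATERIAL_LIMITS_F.items():
    m = mach_limit(limit_f + F_TO_R, T_SEA_LEVEL_R)
    print(f"{name:25s} about Mach {m:.1f} at sea level")
```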

Figure 4 – Compare Tt and Tr to Material Limitations at Sea Level

Figure 5 – Compare Tt and Tr to Material Limitations in the Stratosphere

One should note that stratospheric temperatures are -69.7 F only from about 36,000 feet altitude to about 66,000 feet altitude. Above 66,000 feet, air temperatures rise again, to values intermediate between these two figures! That lowers the speed limitation some, for altitudes above 66,000 feet.

This steady-state soak-out temperature comparison neatly explains why most ramjet missile designs (usually featuring shiny or white-painted bare alloy stainless steel skin) have been limited to about Mach 4 in the stratosphere, and around Mach 3.3 or so at sea level. Those limitations on speed are pretty close to the 1200 F isotherms of total or recovery temperature. Without re-radiation cooling, the skins soak out fairly quickly (the leading edges and nose tips extremely quickly).

To fly faster will require cooled skins, or one-shot ablatives, or else the briefest episodes (scant seconds) of transient flight. The nose-tip and leading edge problem is even worse! That means for long-duration / long-range flight, the skin must be cooled, or else coated with a thick, heavy, one-shot ablative. There are two (and only two) ways to do cooling: (1) backside heat removal, and (2) re-radiation to the environment. Or both!

Backside heat removal must address (1) conduction through the materials, (2) some means of removing the heat from the backside of the materials, and (3) some means of storing or disposing of all the collected heat (what usually gets forgotten). Liquid backside cooling using the fuel comes to mind, with the heat dumped in the fuel tank. However, there are two very severe limits: (1) the liquid cooling materials and media may not exceed the boiling temperature at tolerable pressures, and (2) the heat capacity of the fuel in the tank is very finite, and decreasing rapidly as the vehicle burns off its fuel load.

Re-radiation to the environment requires a very “black” (highly emissive) surface coating, and is further limited by the temperature of the environment to which the heat is radiated. These processes follow a form of the Stefan-Boltzmann Law, to wit: $Q/A = \sigma \varepsilon_s (T_s^4 - T_e^4)$, where $\sigma$ is the Stefan-Boltzmann constant, and $\varepsilon_s$ is the spectrally-averaged material emissivity at the corresponding temperature. Subscript s refers to the hot radiating skin panels, and subscript e refers to the environment.

While deep space is ~4 K, earth temperatures are nearer 300 K, and that is what most atmospheric vehicles usually “see”. The material absorptivity is its emissivity, which is why that value is also used for the radiation received from the environment. A truly “black” hot metal skin might have an emissivity near or above 0.8. This could be achieved in some cases by a metallurgical coating or treatment, in others by a suitable black paint (usually one of ceramic nature, and very high in carbon content).
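As a quick illustration of how strongly re-radiation grows with skin temperature, here is a minimal sketch of the net radiated heat flux from a hot skin to its surroundings, using the Stefan-Boltzmann form with a single gray emissivity. The 300 K environment and 0.8 emissivity are the values mentioned above; the function name and the sample skin temperatures are made up for this example.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/m2-K4


def net_radiated_flux(t_skin, t_env=300.0, emissivity=0.8):
    """Net Q/A radiated from skin to environment, W/m2, temperatures in kelvin."""
    return SIGMA * emissivity * (t_skin ** 4 - t_env ** 4)


# Sample skin temperatures in kelvin, chosen only for illustration.
for t_skin in (500.0, 750.0, 1000.0, 1250.0):
    q = net_radiated_flux(t_skin)
    print(f"Ts = {t_skin:6.0f} K: net re-radiation = {q / 1000.0:7.1f} kW/m2")
```

Because of the fourth-power dependence, the environment term matters little once the skin is several times hotter than its surroundings.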

One More Limitation

to Consider

Once the boundary layer air is hot enough, it is no longer air, it is becoming an ionized plasma. The kinds of heat transfer calculations that

I used here become increasingly inaccurate when that happens, and other correlations developed for entry

from space need to be used instead. As a

rough rule-of-thumb, that limit is about

5000 F air temperature.

If you look at Figure 4 (sea level), you hit the “not-air anymore” limitation starting around Mach 7. In Figure 5 (coldest stratosphere), that limit gets exceeded starting around Mach 8. The only calculation methods that “work” reliably above these limits would be CFD codes, and even then, only if the correct models and correlations are built into the codes. That last is not a given! “Garbage-in, garbage-out” is no joke; it is quite real.
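A quick way to sanity-check the Mach 7 and Mach 8 readings against the 5000 F rule of thumb is the ideal-gas total temperature relation, sketched below in Python. This is only a sketch under stated assumptions: constant specific heat ratio of 1.4, and static air temperatures of 288.15 K (sea level) and 216.65 K (cold stratosphere), which are standard-atmosphere values I have assumed rather than taken from the figures. The correlation behind the figures may differ, for example by using a recovery factor.

```python
import math


def fahrenheit_to_kelvin(t_f):
    """Convert degrees Fahrenheit to kelvin."""
    return (t_f - 32.0) * 5.0 / 9.0 + 273.15


def total_temperature_k(t_static_k, mach, gamma=1.4):
    """Ideal-gas total (stagnation) temperature, constant gamma assumed."""
    return t_static_k * (1.0 + 0.5 * (gamma - 1.0) * mach**2)


def mach_at_total_temperature(t_static_k, t_total_k, gamma=1.4):
    """Invert the relation above: Mach number giving a total temperature."""
    return math.sqrt((t_total_k / t_static_k - 1.0) * 2.0 / (gamma - 1.0))


if __name__ == "__main__":
    limit_k = fahrenheit_to_kelvin(5000.0)  # rule-of-thumb limit from the text
    # Static temperatures below are assumed standard-atmosphere values.
    for label, t_static in (("sea level", 288.15),
                            ("cold stratosphere", 216.65)):
        mach = mach_at_total_temperature(t_static, limit_k)
        print(f"{label}: 5000 F reached near Mach {mach:.1f}")
```

Under these assumptions the threshold falls near Mach 6.9 at sea level and near Mach 8.1 in the cold stratosphere, in line with the Mach 7 and Mach 8 values read from the figures.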

With Re-Radiation Cooling at Emissivity = 0.80

This applies only to lateral skins, not leading edges, because the heat transfer rates are an order

of magnitude higher for leading edges.

That effect alone changes the energy balance enormously.

But for lateral skins, the speed limitation occurs when the re-radiation heat flow equals the convective input to the skin. The complicating factor is that convective heat transfer is a strong function of altitude via the air density, while re-radiation is entirely independent of air density. There are now more variables at work on the energy balance than just ambient air temperature.
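The balance described here can be sketched numerically. The Python below is a minimal illustration, not the method used to generate the plots: it assumes the convective input can be written as a film coefficient times the difference between a recovery temperature and the skin temperature, and solves for the skin temperature at which that input equals the re-radiated flux. The film coefficients and the 2000 K recovery temperature are hypothetical placeholders; a lower coefficient stands in for thinner air at higher altitude.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W/(m^2 K^4)


def heat_balance(t_skin_k, h, t_recovery_k, emissivity, t_env_k):
    """Convective input minus re-radiated output, W/m^2.

    ASSUMPTION: convective input modeled as h * (T_recovery - T_skin).
    """
    convective_in = h * (t_recovery_k - t_skin_k)
    radiated_out = SIGMA * emissivity * (t_skin_k**4 - t_env_k**4)
    return convective_in - radiated_out


def equilibrium_skin_temperature(h, t_recovery_k, emissivity=0.8,
                                 t_env_k=300.0, tol=1e-6):
    """Bisection for the skin temperature where the balance is zero.

    Requires t_recovery_k > t_env_k, so the balance is positive at
    t_env_k, negative at t_recovery_k, and decreasing in between.
    """
    lo, hi = t_env_k, t_recovery_k
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if heat_balance(mid, h, t_recovery_k, emissivity, t_env_k) > 0.0:
            lo = mid  # still net heating: equilibrium is hotter
        else:
            hi = mid  # net cooling: equilibrium is cooler
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    # Hypothetical film coefficients, W/(m^2 K); smaller = thinner air.
    for h in (5.0, 50.0, 500.0):
        t_eq = equilibrium_skin_temperature(h, t_recovery_k=2000.0)
        print(f"h = {h:6.1f} W/m^2-K -> equilibrium skin T = {t_eq:.0f} K")
```

For a fixed recovery temperature, the equilibrium skin temperature drops as the assumed film coefficient drops, which is the altitude effect the text describes.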

That means two charts depicting the “typical” effects are entirely inadequate. We need a sense for how this changes with air density at altitude. What follows is a selection of equilibrium re-radiating temperature versus speed plots, at various altitudes, in a US 1962 Standard Day atmosphere model. Material temperature capabilities are superposed, as before.

Figure 11 – Lateral Skin Radiational Equilibrium at 110,000 feet

Tough Design Problem

How exactly one achieves this re-radiation cooling is quite a difficult design problem. The skin itself will be very hot, in order to re-radiate effectively. Not only will it be very structurally weak, but there will also be heat leakage from it into the vehicle interior. This leakage is inherent, but by careful design it can be limited to rather small values (1-2%) of the energy incident upon and re-radiated from the outer surface.

There must be a sufficient thickness of low density

insulation between that skin and the interior,

one capable of surviving at the skin temperature. This insulation must be some sort of mineral

fiber wool. There are no simple glasses

that survive at the temperatures of interest for hypersonic flight.

The mountings that hold the skin in place constitute metallic conduction paths into the interior. These must be made in a serpentine shape, with a length significantly greater than the insulation thickness, in order to effectively limit heat leakage along the metallic conduction path.
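The benefit of a longer conduction path follows from one-dimensional steady conduction, where the heat flow scales inversely with path length. The Python sketch below illustrates that scaling only; the conductivity, cross-section, lengths, and temperatures are hypothetical placeholders, not design values from this article.

```python
def conduction_leak_watts(k_w_per_m_k, area_m2, path_length_m,
                          t_hot_k, t_cold_k):
    """Steady 1-D conduction along a mounting: Q = k * A * dT / L."""
    return k_w_per_m_k * area_m2 * (t_hot_k - t_cold_k) / path_length_m


if __name__ == "__main__":
    # All numbers below are assumed purely for illustration.
    k = 15.0                 # W/(m K), a generic high-temperature alloy
    area = 1.0e-5            # m^2 cross-section of one mounting
    insulation = 0.05        # m, straight path = insulation thickness
    serpentine = 0.20        # m, folded path four times as long
    t_skin, t_interior = 1100.0, 300.0  # K

    straight = conduction_leak_watts(k, area, insulation, t_skin, t_interior)
    folded = conduction_leak_watts(k, area, serpentine, t_skin, t_interior)
    print(f"straight mount:   {straight:.2f} W")
    print(f"serpentine mount: {folded:.2f} W")
```

In this idealized model, a path four times longer leaks one quarter of the heat for the same cross-section and temperature difference.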

Finally, there is the issue of sealing the structure against throughflow induced by the surface pressure distribution relative to the pressure in the interior. Because it is much easier to design seals that survive cold than seals that survive incandescent heat, it seems likely that the surface skins must be vented, with the pressure distribution resisted by colder structures deeper within the airframe.

Two Sample Cases

The SR-71 and its variants featured a “black” titanium skin, cooled by re-radiation and nothing else. The leading edges (at least very locally) would approach the soak-out temperature limits shown in Figures 4 and 5 above. Typical missions were flown at around 85,000 feet, with speeds up to, but not exceeding, Mach 3.3. In the very slightly colder air at 66,000 feet, that leading edge limit was Mach 3.5.

As Figure 10 shows, the lateral skins had a higher speed limit nearer Mach 4. So we can safely draw the rough conclusion that the SR-71 airframe was likely limited by leading-edge heating to about Mach 3.5 or so, at something around 80,000 or 85,000 feet.

The X-15 featured skins of Inconel-X that were radiationally very “black”. The maximum recommended material use temperature is about 1500-1600 F. Leading edges might tend toward the local soak-out limit at about Mach 4 to 4.5, unless internally cooled by significant internal conduction toward the lateral surfaces of a solid piece, which these were. Thinner air “way up high” might help with that balance, by reducing both the stagnation and lateral heating rates.

As shown in Figure 11, the re-radiation equilibrium limitation near 110,000 feet is closer to Mach 10 for the lateral skins, and higher still at higher altitudes, as the other figures indicate by their trends.

The fastest flight had a white coating, which effectively killed radiational cooling. For that, the soak-out speed limit is closer to Mach 4.5 to 5.5, based upon Figures 4 and 5.

Again, we might very crudely conclude the X-15

was limited by its leading edges to something between Mach 5 and Mach 10. The fastest flight actually flown reached

Mach 6.7, without any evident wing

leading edge or nose damage, excepting

some shock impingement heating damage in the tail section.

My Conclusions:

Most of the outfits claiming they have vehicle designs that

cruise steadily at Mach 8+ (high-hypersonic flight) have not done their thermal

protection designs yet.

That lack inherently means they do not have feasible vehicle

designs at all, since thermal protection

is the enabling item for sustained high-hypersonic flight.

“Hypersonic cruise” (meaning steady state cruise above Mach 4 or 5 for extended ranges) is therefore nothing but a buzzword, without an advanced thermal protection system in place.

The faster the cruise speed, the more advanced this thermal protection must be, and the less likely it is that there will be a metallic solution.

Practical Definitions:

Blunt vehicles = hypersonic Mach 3+

Sharp vehicles = hypersonic Mach 5+

Formally,

“hypersonic” is when the bow shock position relative to the vehicle

surface contour becomes insensitive to flight speed.

A Better Leading Edge Model

A better leading edge model is entirely out of scope here. It might consist of one solid leading edge piece, to be assumed isothermal. It would have a very small percentage of its surface area calculated for stagnation heat transfer, with the remainder calculated as lateral skin heat transfer, except as modified for convexity into the flow near the leading edge. There would be no conduction or convection into the interior. All surfaces would re-radiate to cool.

The next step up is a finite-element approximation, which allows for temperature variations and internal conduction within the leading edge piece. Adding conduction and convection paths into the interior is the next level of modeling fidelity. None of this is amenable to simple hand calculation.

Supersonic Inlet Structures

These are an even more difficult problem, as the inner surfaces are (1) blocked from viewing the external environment for radiational cooling, and (2) exposed to edge-of-boundary layer conditions that are very far indeed from freestream conditions.
